R&D Focus

Addressing Sensor Reliability Through Environment-Based Multi-Sensor Fusion

By Peter Chondro

With the advent of semiconductor integrated circuits (ICs), annual sales of silicon-based circuits climbed to approximately US$439 billion in 2020 [1]. This growth has led to the widespread application of more powerful yet compact circuits in fields such as advanced robotics and, notably, autonomous vehicles (AVs). Machine learning in AVs is supported by current-generation computing platforms such as NVIDIA DRIVE and Qualcomm Snapdragon Ride. AV technology is expected to generate up to US$1.5 trillion in additional revenue for the automotive industry by 2030 [2].

However, the general public and investors still doubt the prospects of AV technology [3]. One concern is sensor reliability. In a recent incident, an autonomous vehicle plowed into an overturned truck on a highway; an investigation later revealed that environment-induced failures in both the camera-based classifier and the RaDAR-based system led to a false-negative detection of the overturned truck [4]. Without a reliable sensing module, no robotic system, AVs included, can function properly, because its awareness of its surroundings is limited.

In an attempt to solve this issue, ITRI has proposed a framework that applies environment-based fusion technology to the multi-modal sensors of AVs. The framework comprises multiple sensor types including (but not limited to) RGB camera(s), LiDAR(s), and RaDAR(s). Each type has specific characteristics and is sensitive to the surrounding environment in ways that can affect sensor performance (see Table 1). As a result, sensor reliability depends on environmental conditions. For instance, at night, LiDAR may provide more reliable data for its corresponding classifier than an RGB camera does.

Table 1. Characteristics and environmental sensitivity of each sensor type

| Constraints | RGB Camera | LiDAR | RaDAR |
|---|---|---|---|
| Sensor Type | Passive | Active | Active |
| Lux Interference | Highly Sensitive | Not Sensitive | Not Sensitive |
| Sun-Exposure Interference | Highly Sensitive | Slightly Sensitive | Not Sensitive |
| Weather Interference | Mildly Sensitive | Highly Sensitive | Slightly Sensitive |
| Sensing Range | 50 m | 100 m | 150 m |
| Field of View | 60° | 360° | 30° |
| Resolution | Dense | Sparse | Highly Sparse |
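
One way to make the environmental dependence in Table 1 concrete is to transcribe its three interference rows as data and rank the sensors for a given condition. The following is only an illustrative sketch: the numeric ranks (including the assumption that "Slightly" is milder than "Mildly"), the dictionary keys, and the `rank_sensors` helper are hypothetical and are not part of ITRI's framework.

```python
# Table 1 interference rows transcribed as data.
# The numeric ordering of the labels is an assumption made for illustration.
SENSITIVITY_RANK = {
    "Not Sensitive": 0,
    "Slightly Sensitive": 1,
    "Mildly Sensitive": 2,
    "Highly Sensitive": 3,
}

TABLE_1 = {
    "lux": {"camera": "Highly Sensitive", "lidar": "Not Sensitive", "radar": "Not Sensitive"},
    "sun_exposure": {"camera": "Highly Sensitive", "lidar": "Slightly Sensitive", "radar": "Not Sensitive"},
    "weather": {"camera": "Mildly Sensitive", "lidar": "Highly Sensitive", "radar": "Slightly Sensitive"},
}


def rank_sensors(interference):
    """Return sensor names ordered from least to most sensitive (ties broken by name)."""
    row = TABLE_1[interference]
    return sorted(row, key=lambda sensor: (SENSITIVITY_RANK[row[sensor]], sensor))


print(rank_sensors("lux"))      # ['lidar', 'radar', 'camera']
print(rank_sensors("weather"))  # ['radar', 'camera', 'lidar']
```

Under these assumed ranks, low-light conditions favor LiDAR and RaDAR over the camera, which matches the night-time example in the text.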

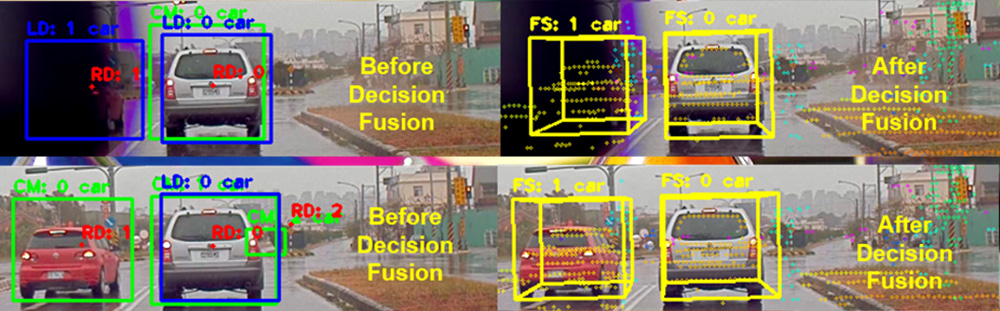

ITRI’s method, known as Decision Fusion, can be applied to a vehicle or robot with multiple on-board sensors (e.g., RGB camera, RaDAR, and LiDAR) [5]. The technology provides overlapping fields of view across the different sensor types, as well as a surround view from the multiple sensors of each type. The method begins with data preprocessing, which synchronizes the RGB camera(s), RaDAR(s), and LiDAR(s) and rectifies their coordinate systems into a single coordinate system with a common origin. Once rectified, the data from each sensor type are processed by the corresponding classifier to produce a set of 3D object detection boxes expressed in meters. Since there may be multiple sensors of each type, a meter-based 3D intersection-over-union (IoU) technique is used to combine the bounding boxes from the overlapping fields of view of each type. See Figure 1 below for a demo of Decision Fusion.

Figure 1. Demo of Decision Fusion (CM: Camera, LD: LiDAR, RD: RaDAR, FS: Decision Fusion)
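
The two geometric steps described above, mapping sensor data into a common coordinate frame and measuring box overlap in meters, can be sketched as follows. This is a minimal illustration under simplifying assumptions, not the patented implementation: the rotation is restricted to yaw about the vertical axis, boxes are axis-aligned, and the names `Box3D`, `to_common_frame`, and `iou_3d` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Box3D:
    """Axis-aligned 3D box in meters, given by its min and max corners (x, y, z)."""
    min_corner: tuple
    max_corner: tuple


def to_common_frame(point, yaw_rad, translation):
    """Map a sensor-frame point into the common frame: rotate about z, then shift.

    Assumes the sensor is mounted level, so only yaw and an offset are needed.
    """
    x, y, z = point
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    tx, ty, tz = translation
    return (c * x - s * y + tx, s * x + c * y + ty, z + tz)


def volume(box):
    """Volume in cubic meters; zero if the box is empty along any axis."""
    dims = [hi - lo for lo, hi in zip(box.min_corner, box.max_corner)]
    return math.prod(max(0.0, d) for d in dims)


def iou_3d(a, b):
    """Intersection-over-union of two axis-aligned boxes."""
    lo = tuple(max(p, q) for p, q in zip(a.min_corner, b.min_corner))
    hi = tuple(min(p, q) for p, q in zip(a.max_corner, b.max_corner))
    inter = volume(Box3D(lo, hi))
    union = volume(a) + volume(b) - inter
    return inter / union if union > 0 else 0.0


# Two detections of the same object from two sensors of the same type.
box_a = Box3D((0.0, 0.0, 0.0), (2.0, 2.0, 2.0))
box_b = Box3D((1.0, 0.0, 0.0), (3.0, 2.0, 2.0))
overlap = iou_3d(box_a, box_b)  # intersection 4 m^3 over union 12 m^3

# A point seen by a sensor rotated 90 degrees and mounted 2 m forward, 1 m up.
moved = to_common_frame((1.0, 0.0, 0.0), math.pi / 2, (2.0, 0.0, 1.0))
```

Real detections are usually oriented boxes, for which the intersection volume requires polygon clipping in the ground plane; the axis-aligned form is used here only to keep the sketch short.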

Then, the bounding boxes from different sensor types are compared in pairs. In the first stage, for instance, all LiDAR-based classifier detections are compared with those from the camera-based classifier. Any LiDAR detection that overlaps with a camera detection is scored against it on seven criteria: 1) time of day; 2) local intensity; 3) weather condition; 4) light exposure; 5) object distance; 6) angular position; and 7) classifier confidence. The detection with the higher score is chosen as the result of this first comparison. LiDAR or camera detections without a counterpart, on the other hand, are analyzed against the same seven criteria to determine whether they are false positives. The comparison is then repeated between these results and the RaDAR-based classifier detections. Table 2 compares each sensor type’s classifier performance with that of Decision Fusion. Note that the proposed Decision Fusion improves on the accuracy of RGB camera-, LiDAR-, and RaDAR-based detection by approximately 9, 6, and 11 percentage points, respectively.
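
The staged, pairwise comparison can be sketched roughly as below. The article does not specify how the seven criteria are combined, so this sketch makes a loud simplification: six of them are collapsed into one hypothetical per-sensor environment factor, which is multiplied by the classifier confidence. The `Detection` type, `score`, `fuse_pair`, both thresholds, and all numeric values are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    sensor: str        # "camera", "lidar", or "radar"
    box: tuple         # (xmin, ymin, zmin, xmax, ymax, zmax) in meters
    confidence: float  # classifier confidence in [0, 1]


def iou_3d(a, b):
    """Axis-aligned 3D IoU of two (xmin, ymin, zmin, xmax, ymax, zmax) boxes."""
    inter = 1.0
    for axis in range(3):
        overlap = min(a[axis + 3], b[axis + 3]) - max(a[axis], b[axis])
        if overlap <= 0:
            return 0.0
        inter *= overlap
    vol_a = (a[3] - a[0]) * (a[4] - a[1]) * (a[5] - a[2])
    vol_b = (b[3] - b[0]) * (b[4] - b[1]) * (b[5] - b[2])
    return inter / (vol_a + vol_b - inter)


def score(det, env_factor):
    """Hypothetical score: confidence scaled by an environment factor per sensor."""
    return det.confidence * env_factor[det.sensor]


def fuse_pair(dets_a, dets_b, env_factor, iou_thresh=0.5, keep_thresh=0.4):
    """Compare two detection sets: keep the better of each overlapping pair,
    and keep unpaired detections only if their own score is high enough."""
    fused, matched_b = [], set()
    for a in dets_a:
        partner = next((j for j, b in enumerate(dets_b)
                        if j not in matched_b and iou_3d(a.box, b.box) >= iou_thresh), None)
        if partner is None:
            if score(a, env_factor) >= keep_thresh:
                fused.append(a)
        else:
            matched_b.add(partner)
            fused.append(max((a, dets_b[partner]), key=lambda d: score(d, env_factor)))
    for j, b in enumerate(dets_b):
        if j not in matched_b and score(b, env_factor) >= keep_thresh:
            fused.append(b)  # unpaired, but judged unlikely to be a false positive
    return fused


# Assumed night-time factors: the camera is trusted least.
night = {"camera": 0.4, "lidar": 0.9, "radar": 0.8}
lidar = [Detection("lidar", (10.0, 0.0, 0.0, 14.0, 2.0, 2.0), 0.7)]
camera = [Detection("camera", (10.5, 0.0, 0.0, 14.5, 2.0, 2.0), 0.9),
          Detection("camera", (30.0, 5.0, 0.0, 32.0, 7.0, 2.0), 0.6)]
radar = [Detection("radar", (50.0, -1.0, 0.0, 54.0, 1.0, 2.0), 0.6)]

stage1 = fuse_pair(lidar, camera, night)   # LiDAR vs. camera
stage2 = fuse_pair(stage1, radar, night)   # stage-1 result vs. RaDAR
print([d.sensor for d in stage2])
```

In this toy night-time scene, the LiDAR detection wins its overlapping pair despite the camera's higher raw confidence, the unpaired camera detection is discarded as a likely false positive, and the unpaired RaDAR detection is kept.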

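Table 2. Classifier performance of each sensor type compared with Decision Fusion

| Evaluation | Camera | LiDAR | RaDAR | Proposed |
|---|---|---|---|---|
| Accuracy (ACC) | 87.0% | 90.1% | 84.8% | **96.0%** |
| False-negative rate (FNR) | 5.6% | 4.5% | 7.1% | **2.0%** |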

Highlighted scores represent best results.
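
The gains implied by Table 2 are differences in percentage points, which a few lines of arithmetic make explicit. The values are copied from the table; the variable names are only illustrative.

```python
# (accuracy %, false-negative rate %) per single-sensor classifier, from Table 2.
single_sensor = {
    "Camera": (87.0, 5.6),
    "LiDAR": (90.1, 4.5),
    "RaDAR": (84.8, 7.1),
}
proposed_acc, proposed_fnr = 96.0, 2.0

# Percentage-point accuracy gain and FNR reduction of Decision Fusion.
gains = {
    name: (round(proposed_acc - acc, 1), round(fnr - proposed_fnr, 1))
    for name, (acc, fnr) in single_sensor.items()
}

for name, (acc_gain, fnr_drop) in gains.items():
    print(f"{name}: +{acc_gain} points ACC, -{fnr_drop} points FNR")
```

The accuracy gains come to 9.0, 5.9, and 11.2 percentage points, which round to the 9, 6, and 11 quoted in the text.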

Through its environment-based multi-sensor fusion technology, ITRI aims to enhance the perception capability of AVs so that road hazards are detected more precisely and reliably regardless of environmental conditions, thereby improving the safety of self-driving.

References

[1] SIA, “Global Semiconductor Sales Increase 6.5% to $439 billion in 2020,” Semiconductor Industry Association. Available: https://www.semiconductors.org/global-semiconductor-sales-increase-6-5-to-439-billion-in-2020/. Accessed in April 2021.

[2] V. P. Gao, H.-W. Kaas, D. Mohr and D. Wee, “Automotive Revolution – Perspective Towards 2030,” McKinsey and Company. Available: https://www.mckinsey.com/industries/automotive-and-assembly/our-insights/disruptive-trends-that-will-transform-the-auto-industry/de-de. Accessed in April 2021.

[3] C. Tennant, S. Stares and S. Howard, “Public Discomfort at the Prospect of Autonomous Vehicles: Building on Previous Surveys to Measure Attitudes in 11 Countries,” Transportation Research Part F: Traffic Psychology and Behaviour, vol. 64, pp. 98-118, July 2019.

[4] B. Templeton, “Tesla In Taiwan Crashes Directly Into Overturned Truck, Ignores Pedestrian, With Autopilot On,” Forbes. Available: https://www.forbes.com/sites/bradtempleton/2020/06/02/tesla-in-taiwan-crashes-directly-into-overturned-truck-ignores-pedestrian-with-autopilot-on/?sh=67eca3f358e5. Accessed in April 2021.

[5] P. Chondro and P.-J. Liang, “Object Detection System, Autonomous Vehicle using the Same, and Object Detection Method Thereof,” U.S. Patent 10,852,420, issued December 1, 2020.

About the Author

Peter Chondro is a senior engineer at the Information and Communications Research Laboratories at ITRI. He received his B.Eng. degree in electrical engineering from Institut Teknologi Sepuluh Nopember (ITS), Indonesia, in 2014 and his M.Sc. and Ph.D. degrees in electronics and computer engineering from National Taiwan University of Science and Technology (NTUST), Taiwan, in 2016 and 2018, respectively. His current research focuses on computer vision and machine learning for autonomous vehicles.